Making data-informed decisions is as much about building good thinking habits and decision practices as it is about becoming data literate. We explain why in our article “Want more from data? Develop better thinking habits and decision practices.”

In this article, we give you our 7-step blueprint for getting your teams to make data-informed decisions.

Learning challenges

Let’s first look at the three key learning challenges we need to address:

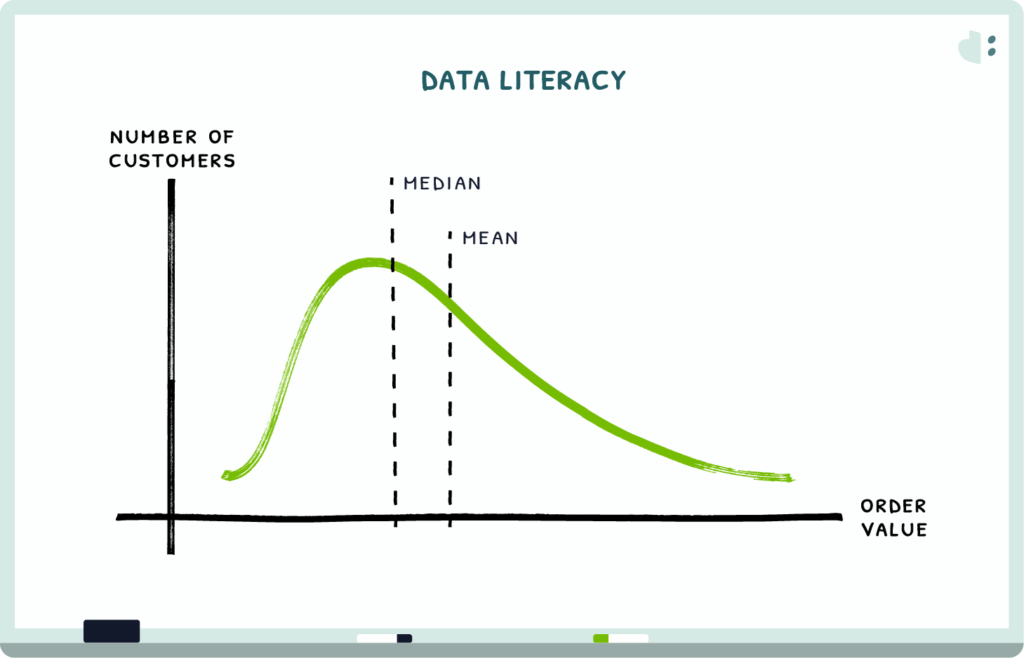

Poor data literacy. Anyone working with data needs to understand and be able to apply certain analytical and statistical concepts.

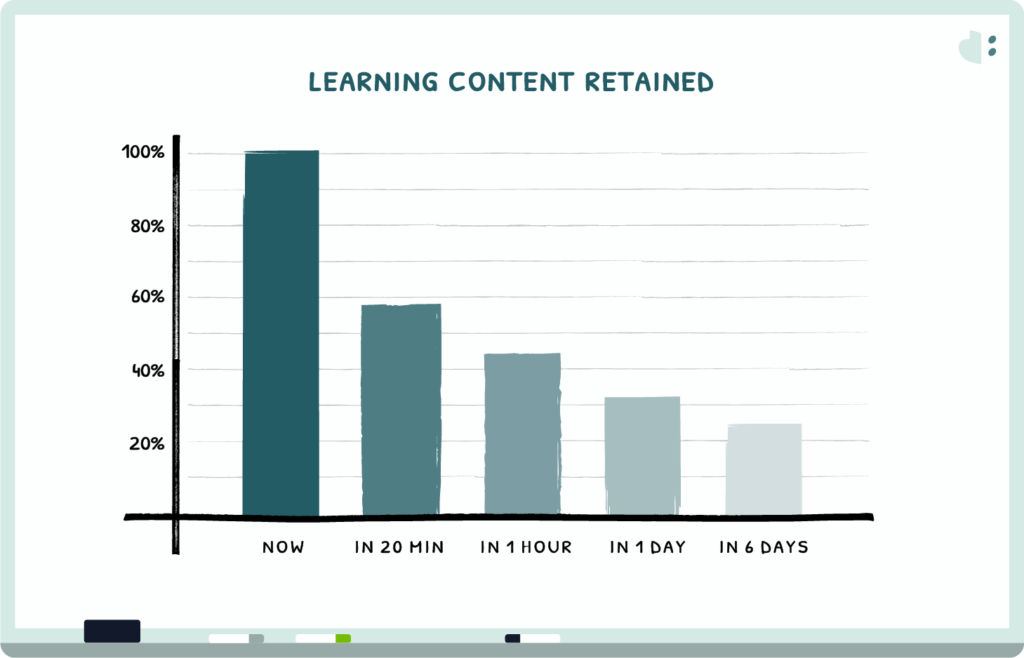

The forgetting curve. Most things learned in a single training session are poorly retained. Even when practical examples are used, learners often apply them once and then return to their usual practices.

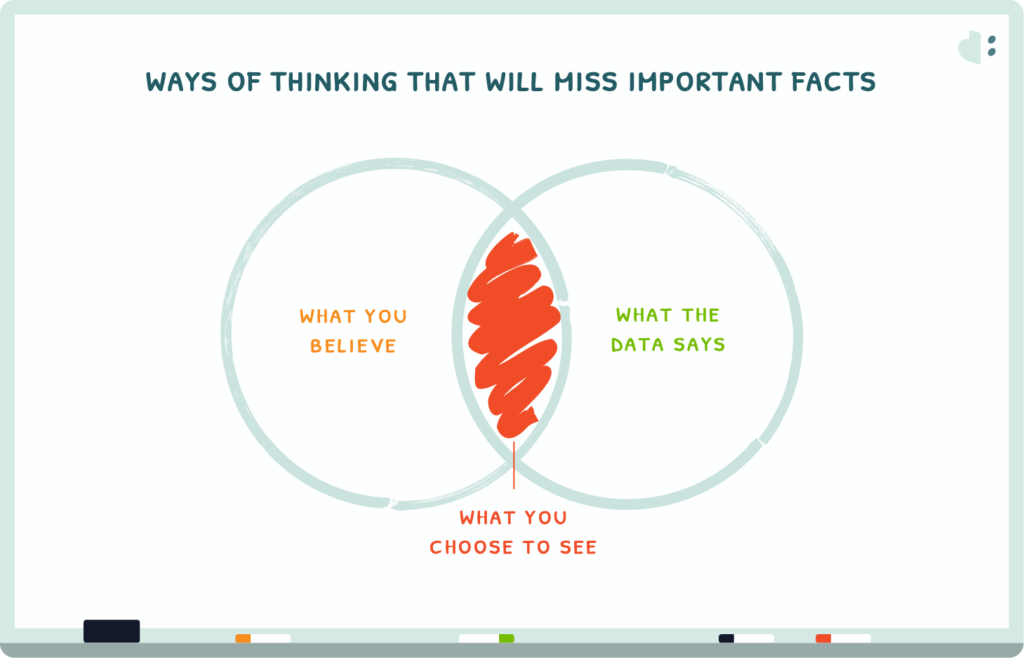

Narrow thinking that misses important facts. You don’t clarify the question you need to answer, you choose whatever measures are conveniently available, you look only for data that supports your ideas, and so on.

7 Steps

Our 7 steps for getting your teams to make data-informed decisions address these challenges systematically. Besides data skills, they emphasize developing better practices and turning them into habits that support a more productive way of thinking and working with data.

Instead of starting with data skills, you focus on how and where to improve your day-to-day work. You target specific business practices, determine how problem solving needs to change, and help people make that change.

Here are the steps we will detail below:

- Identify a process or practice to improve

- Envision future decision-making behavior

- Identify promoters and detractors

- Define learning objectives and the relevant data skills

- Understand the teams’ skills and current beliefs

- Develop the learning experience

- Fine-tune the learning format

We’ve experimented with this approach for two years. It changed how people used data and, ultimately, helped them make better decisions.

Step 1 – Where can a more data-informed approach help?

As a first step, identify specific decision practices that would benefit from a more data-informed approach. For example, you may regularly ask your product teams to identify high-potential growth opportunities.

Rather than relying on their feel for the market, you want them to make a data-informed case. So “assessing growth opportunities” may be a practice you want to zero in on. How do you do that today? How would you do it in a more data-informed way?

We recommend going a few steps further, though. What would be the benefit of making more data-informed suggestions here?

Unless you can clearly describe the benefits, why would anyone change the way they do things today? And why would senior leaders support the sustained effort it may take to change how things are done?

Using the example of “assessing growth opportunities”, you can frame the improvement potential by looking at the hits and misses in the past:

- How much incremental value (revenue, customers, profitability, etc.) did the best opportunities you uncovered capture?

- How often did opportunities not pan out? How costly was it to pursue those?

- When were opportunities missed completely? How much potential upside was lost?

- How, and to what degree, could a more data-informed approach reasonably change those outcomes?

Whether for this specific example or any other process you are looking to improve, it’s important to come up with at least a rough, research-backed estimate of the benefits. This will help you prioritize learning and change efforts. It will also give you a baseline for assessing the success of your training efforts.
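To illustrate how the questions above can turn into a rough estimate, here is a minimal Python sketch. It assumes you can put approximate figures on the value captured by past hits, the cost of past misses and the upside lost on missed opportunities. The class and function names, the improvement shares and all numbers are hypothetical placeholders, not data from our work.

```python
# Rough estimate of the improvement potential for one decision practice,
# e.g. "assessing growth opportunities". All figures are hypothetical placeholders.
from dataclasses import dataclass


@dataclass
class PastOutcomes:
    value_from_hits: float  # incremental value captured by the best opportunities
    cost_of_misses: float   # cost of pursuing opportunities that did not pan out
    missed_upside: float    # upside lost on opportunities that were missed completely

    @property
    def net_value(self) -> float:
        """Baseline to compare against once the new practices are in place."""
        return self.value_from_hits - self.cost_of_misses


def improvement_potential(past: PastOutcomes,
                          misses_avoided: float,
                          upside_recovered: float) -> float:
    """Value a more data-informed approach might add over the same period.

    misses_avoided:   assumed share (0..1) of wasted spend better analysis avoids
    upside_recovered: assumed share (0..1) of missed upside better analysis captures
    """
    for share in (misses_avoided, upside_recovered):
        if not 0.0 <= share <= 1.0:
            raise ValueError("shares must be between 0 and 1")
    return past.cost_of_misses * misses_avoided + past.missed_upside * upside_recovered


baseline = PastOutcomes(value_from_hits=2_000_000,
                        cost_of_misses=500_000,
                        missed_upside=1_000_000)
estimate = improvement_potential(baseline, misses_avoided=0.3, upside_recovered=0.2)
print(f"Baseline net value: {baseline.net_value:,.0f}")
print(f"Estimated improvement potential: {estimate:,.0f}")
```

With these placeholder inputs, the baseline net value is 1,500,000 and the estimated improvement potential is 350,000. The two shares are the weakest inputs, so it is worth writing down how you arrived at them; the baseline is what you later compare against.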

Step 2 – Who do you want to be?

Yes, you read that right. Who do you want to be? In Step 1 you clarified which process or practice you want to change, and why. Now you create a vision for what decision making or operations in your organization should look like.

Using our example of assessing growth opportunities, what are the decision points? What type of data or analysis would you expect to be brought forward at each point? How should the conversations unfold?

When you are looking to build new habits in your personal life, it’s more important to develop a clear vision of who you want to become than of what you want to achieve. Apply the same idea to your organization. Paint a vivid picture of how you want your teams to operate and which behaviors you want to see, rather than just establishing goals.

Step 3 – Data skills aside, why can’t you get there today?

Now that you have described how you want your teams to operate, identify the promoters and detractors for getting there. What would make it easier to adopt the behaviors you want to see (promoters)? What stands in the way (detractors)?

Create a promoter:

If you want your team to ask critical questions about whatever situation you ask them to assess, how can you reward good questions, not just good solutions?

Going back to our example of assessing opportunities, could identifying key questions be part of a review process, or even a formal stage gate a project has to pass before it can advance?

Remove a detractor:

How do decision makers respond to data-informed recommendations? If they are skeptical, do they simply overrule the recommendation based on their intuition or do they lay out specific concerns?

The latter approach gives the team a chance to research those concerns and either disprove them or revise their recommendation. That way, you encourage a data-oriented approach from the top down.

By assessing and dealing with promoters and detractors you can create an environment in which the changes you are hoping to see can succeed.

Step 4 – Which data skills are needed?

Based on the process or practice you want to improve (Step 1) and how you envision decisions being made (Step 2), you should be able to identify the specific types of problems your team needs to solve using data.

This is about breaking down the bigger task into specific abilities or learning objectives. For each of those learning objectives you can then define the knowledge, skills and practices needed.

Here is what this could look like for our example of getting better at assessing growth opportunities:

We suggest you focus equally on ways of thinking about a problem, so-called concepts or frameworks (break-even analysis, critical assumptions), and technical skills (cause-and-effect analysis, calculating expected value).
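To make two of these concepts concrete, here is a minimal Python sketch of expected value and break-even analysis applied to a hypothetical growth opportunity. The function names, scenario probabilities, price and cost figures are illustrative assumptions, not a recommended model.

```python
# Expected value and break-even analysis for a hypothetical growth opportunity.
# All inputs are illustrative assumptions.


def expected_value(scenarios: list[tuple[float, float]]) -> float:
    """Probability-weighted payoff; scenarios are (probability, payoff) pairs."""
    total_p = sum(p for p, _ in scenarios)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("scenario probabilities must sum to 1")
    return sum(p * payoff for p, payoff in scenarios)


def break_even_units(fixed_costs: float, price: float, unit_cost: float) -> float:
    """Units that must be sold before the opportunity covers its fixed costs."""
    margin = price - unit_cost  # contribution per unit sold
    if margin <= 0:
        raise ValueError("price must exceed unit cost to break even")
    return fixed_costs / margin


# Hypothetical opportunity: strong, moderate and failed outcomes with net payoffs.
scenarios = [(0.2, 900_000), (0.5, 200_000), (0.3, -300_000)]
print(f"Expected value: {expected_value(scenarios):,.0f}")
print(f"Break-even volume: {break_even_units(250_000, 50.0, 30.0):,.0f} units")
```

This prints an expected value of 190,000 and a break-even volume of 12,500 units. The break-even volume also doubles as a critical assumption to test: if the team cannot make a credible, data-backed case for reaching it, the opportunity deserves a second look.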

For technical skills, it’s more important to understand the methods, and when they are or aren’t useful, than to master them. This is especially true if your teams collaborate with analysts who can bring the number-crunching power to the table.

Step 5 – What’s the skill and attitude gap?

Before building out a learning program, there’s one more step to take. In Step 3 we looked at organizational promoters and detractors. In the same way, you now want to understand the current skill levels and problem-solving culture of individuals and teams.

What is their background? How would they solve the types of problems you laid out in the previous step? What would happen if you asked them to address those problems in a more data-oriented way?

What are their attitudes towards using data? How does the way they think about their work affect how they use data? What are common misconceptions?

When you know where people are coming from, the learning experience you create becomes a bridge to the beliefs and behaviors you would like to see.

Step 6 – Design an action learning experience

In this step you bring everything from the previous steps together. You design a learning experience that (1) builds skills on the job and (2) fosters the practices you want to see.

We call it a learning “experience” because it includes how individuals and teams will put the newly developed skills to work. It’s not just about how they will absorb new ideas or learn new concepts and techniques.

For building the skills, you have many options – from online materials to workshops held by external companies to in-house experts teaching skills. Whatever you choose, make sure people bring their own work or projects and apply what is taught right away.

For promoting new practices and habits, let’s use the example of assessing growth opportunities again:

- To encourage a more robust, data-informed discussion, you may give those projects dedicated time during monthly business reviews. This would be one of the “promoters” you identified in Step 3.

- If you set up stage gates for assessing projects, you may have a coached prep session one week before each stage gate.

- You may run a series of facilitated post-mortems with teams or individuals. In them, participants debrief each other on what went well and what they found challenging when arguing with data.

The goal is to create as many mutually reinforcing learning opportunities as possible. Using new skills in real-world situations gives you instant, relevant feedback, which is critical to habit development.

Step 7 – Fine-tuning the learning format

Lastly, you will want to fine-tune the learning format. Your Learning and Development partners will have plenty of ideas, starting with the learning culture of your organization.

For data and analytics skills, consider self-discovery rather than lectures. You can explore many data-related concepts through a mix of online sources, workshops and guided questions related to the projects at hand. You can deepen the understanding of core concepts in facilitated group discussions.

Framing a problem and other thought processes, on the other hand, are practices you learn more effectively in the flow of work, while working on a current project.

Importantly, you want to pair learners with an experienced thought partner to build confidence, especially when situations don’t quite match what was in the book or what was taught in the workshop.

Whether you rely on data and analytics translators in-house, involve program alumni as mentors or hire someone with operational experience as your “data coach”, think of this as your quality assurance effort. It’s the difference between learning concepts in theory and applying them successfully and, ultimately, changing habits.

Start small, scale up what works

Test the comprehensive approach sketched out above on a small scale. Start with one or a few teams. Pick a particular business process. See if a thoughtfully designed learning experience delivers the results you are looking for.

Processes or practices to improve shouldn’t be hard to find. Developing a promotional strategy, assessing growth opportunities, targeting marketing campaigns, automating procurement decisions, evaluating the success of grant projects, or creating data-informed policy interventions – you probably already have a list.

These days, you will be hard pressed to find an area or decision for which no data exists. As long as you can make a clear case for more data-informed decisions, you have something to work with.

Once you’ve hit on a learning experience that works for your organization, build from there. This approach may serve you better than a top-down data literacy program. Data-literacy programs typically don’t commit to specific changes in decision making or to process efficiencies. And they can’t, unless they are delivered by people with the necessary business or operations experience.

Don’t just take it from us

A recent McKinsey case study shows the value of a comprehensive approach. It chronicles how McKinsey worked with blast furnace operators to use analytics to improve a steel plant’s output. Besides helping workers understand how to work with data, they changed practices and habits.

They realized that the people who were used to tuning the machinery based on heuristics and experience had to build up “a new belief system”. They had to achieve a cultural shift. As one operations manager is quoted: “With analytics models, people have become more data oriented. Instead of debating the right answer, they can dig into the data and find it, and create an analytics model to provide decision support.”

We can help

If you need help building or delivering a learning experience that changes how your teams work with data, see examples of how Data Brave works with teams to make more data-informed decisions and use data to scale processes.